
The First Beta That Broke Us (So We Could Scale)

Ardit Berisha · Nov 20, 2025

The first beta didn’t look like a launch.

There was no polished rack of servers, no redundant everything, no clean “production” word anywhere in the stack. It was a simple XAMPP box, a low-bandwidth line with about 20 Mbps upload, and three people—Ardit, Elvis, and Driton—who wanted to see if an idea could breathe outside their own heads.

It was the first time Amaro Than was going to be seen by people who hadn’t watched it being built.

---

They didn’t start with the “real” setup on purpose.

“This is not production,” Ardit kept saying, half a warning, half a promise. “This is just to see how it feels.”

They invited a small circle. Trusted people. Friends, early believers, a few who only knew that “there’s this new app” and that it was somehow for them.

The rules were simple:

* Closed beta.

* Around twenty people.

* Try everything.

* Break it if you can.

* Tell the truth.

The XAMPP server hummed quietly in the background—Apache, PHP, MySQL, all running on a development machine that was never meant to hold a community. It was the kind of stack you use to try ideas, not to carry the weight of real users.

But that night, it had to do both.

---

At first, it was magic.

Logins worked. Profiles loaded. The first posts trickled in. A text here, a small image there. The feed moved. For a moment, the three of them could actually watch Amaro Than behave like a real place.

Messages started coming in.

“This looks different.”

“It feels clean.”

“I can’t believe this is built here.”

Every tiny reaction was a validation of months of design choices and arguments and rewrites. It was proof that this wasn’t just another app; it was a home being sketched into existence.

Ardit watched the logs scroll by, a quiet smile hiding behind concentration. CPU was fine. Memory steady. Database queries quick. Bandwidth… okay, maybe a bit tight, but still manageable.

Then someone tried to upload a video.

---

On the surface, nothing changed.

A progress bar appeared. The usual “uploading…” state. A second passed. Two. Five.

Behind the scenes, everything changed.

The XAMPP box, which had been handling light traffic without complaint, suddenly had to ingest a raw video file over a tiny 20 Mbps upload line. Disk writes spiked. PHP processes waited. The network queue started to swell.

The logs began to show small delays—at first a few hundred milliseconds, then seconds.

“Hey, my feed is not loading,” someone messaged.

“I tapped refresh and it’s stuck,” another wrote.

Within minutes, other testers felt it too. Timelines that had been snappy a moment ago were now sluggish. Buttons pressed but didn’t respond. Even simple pages like login and profile edits began to hesitate.

And then a second person tried to upload a video.

That’s when everything snapped.

---

With only five people truly active at the same time, the system did something that every builder dreads: it collapsed in slow motion.

The XAMPP server, designed for development, not load, started to choke. Threads piled up. Memory was eaten by PHP processes trying to juggle big requests. The single MySQL instance tried to serve reads and writes while the disk was busy swallowing giant raw files.

Apache didn’t fall over all at once. It staggered.

Some requests would still go through—after a long wait. Others would time out. Some users saw blank pages. Others saw the spinner spin forever.

To testers, it felt simple: “The app crashed.”

To the three of them, watching in real time, it felt like an X-ray of their entire architecture.

This is what happens, Ardit thought, when you put real weight on a structure built for experiments.

He closed his eyes for a second, then did what he always did: he stopped blaming the server and started looking for the pattern.

---

The numbers told a very clear story.

CPU: fine at low concurrency, then spiking exactly when video uploads began.

Disk: thrashing during large file writes.

Network: uplink completely swallowed by a single user sending a big video.

Database: overloaded not because of bad queries, but because everything else around it was stuck.

It wasn’t text posts. It wasn’t likes. It wasn’t even images.

It was video.

One user, one upload, one small home connection, and all of Amaro Than felt it.

XAMPP itself was a known limitation. Ardit had never believed it could carry production. What he hadn’t fully seen, until that night, was how aggressively uncompressed media could poison the experience for everyone.

On paper, it was obvious: big files on a narrow pipe are a problem.

In practice, it’s different to watch a single upload freeze an entire app while ten people in a closed beta stare at their phones and wonder if this is “just another broken idea.”
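The arithmetic behind "big files on a narrow pipe" is easy to sketch. The file sizes below are hypothetical (the story doesn't say how large the beta videos were), megabytes are decimal, and protocol overhead is ignored, so real transfers would be slower than these best-case figures:

```python
def transfer_seconds(file_megabytes: float, link_megabits_per_s: float) -> float:
    """Best-case time to push a file through a link, ignoring protocol overhead."""
    megabits = file_megabytes * 8  # 1 byte = 8 bits
    return megabits / link_megabits_per_s

# Hypothetical 300 MB raw phone video over a ~20 Mbps line like the beta's.
raw = transfer_seconds(300, 20)       # 120.0 seconds with the line fully saturated
# A hypothetical ~30 MB optimized rendition of the same clip.
optimized = transfer_seconds(30, 20)  # 12.0 seconds
```

For those two minutes, every login, feed refresh, and like competes for whatever is left of the line. The same math repeats each time the file is served back out to a viewer, which is where a lighter rendition pays off.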

That was the moment something clicked.

If one person’s video could bring a small test environment to its knees, what would happen when it was hundreds? Thousands? Tens of thousands?

They didn’t need a slightly better XAMPP setup. They needed a different way of thinking.

---

After they stopped the test and apologized to the group, the three of them reset.

Elvis looked at the server stats and cost charts.

Driton replayed the user experience in his mind: the moment things went from “wow” to “wait, is it broken?”

Ardit stared at the metrics that mattered most to him—latency, throughput, failure rate—and saw a pattern he couldn’t ignore.

“We’re not just building a social app,” he said. “We’re building a streaming platform disguised as a social app.”

Because for Roma youth, the point wasn’t just to post text. It was to show life: dance, music, street, family, everyday moments. That meant video. A lot of it.

If video was at the heart of Amaro Than, then the architecture couldn’t treat it as an afterthought. It had to be the thing the whole system was designed around.

They didn’t just need a stronger server.

They needed a brain.

---

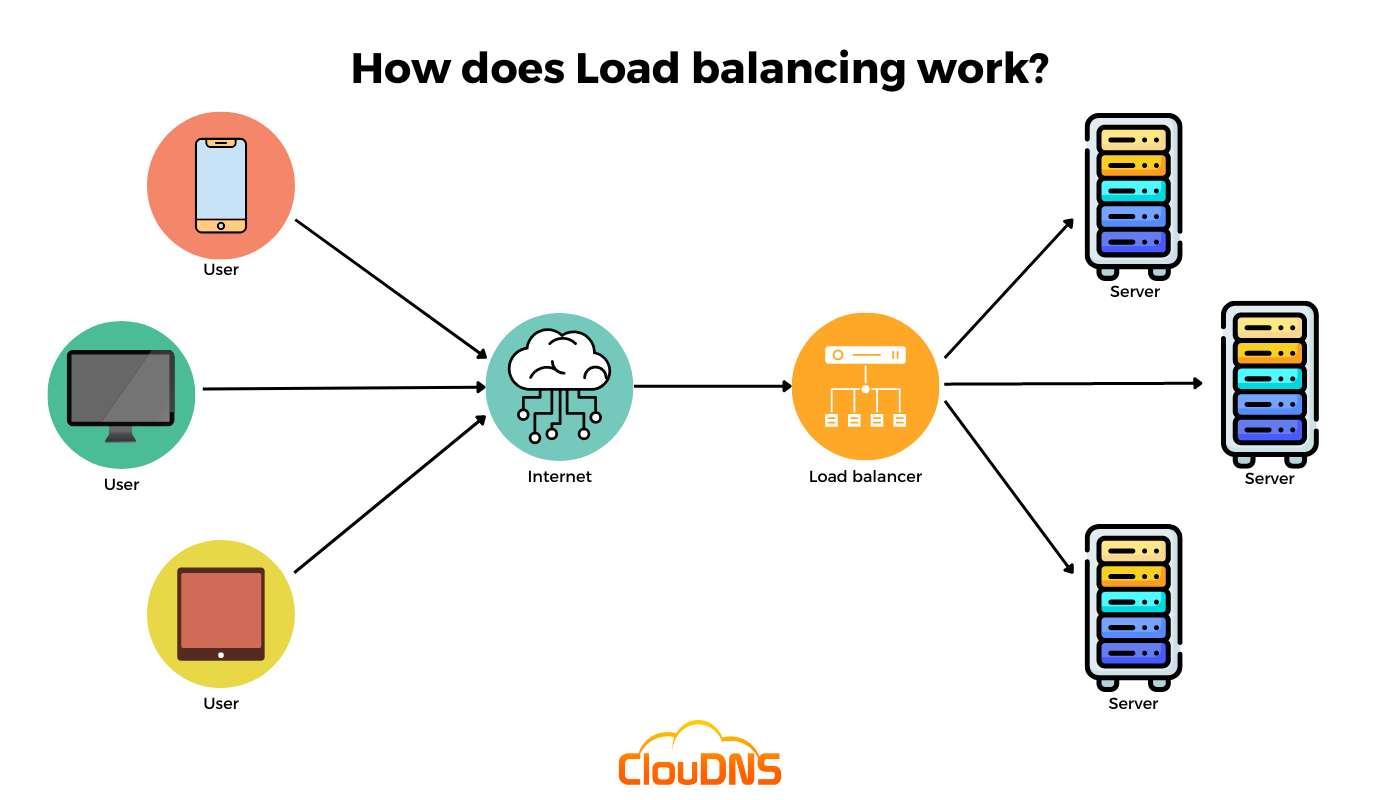

The first piece of that brain was an idea Ardit had been circling for weeks: an optimization algorithm that understood media, users, and infrastructure all at once.

Not just “compress this file”. Anyone could do that.

He wanted something that would look at each upload and ask:

* Where is this user?

* How fast is their connection—really, not just theoretically?

* What device are they on?

* What does this video need to be to feel good, not just look good?

* How can we transform it so that it’s light enough for the network but rich enough for the story?
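Those questions can be turned into a decision function. This is a minimal sketch, not NARA-1r itself: `UploadContext`, `Profile`, `choose_profile`, the device buckets, the ladder values, and the 0.7 headroom factor are all hypothetical. It only shows the idea of choosing the richest output that both the device and the *measured* connection can handle:

```python
from dataclasses import dataclass

@dataclass
class UploadContext:
    measured_kbps: float   # throughput actually observed, not the advertised plan
    device_class: str      # hypothetical buckets: "low", "mid", "high"

@dataclass
class Profile:
    max_height: int        # output resolution cap in pixels
    video_kbps: int        # target video bitrate

# Hypothetical ladder, ordered from heaviest to lightest.
LADDER = [Profile(1080, 4500), Profile(720, 2500), Profile(480, 1000), Profile(360, 600)]

# Hypothetical resolution ceiling per device bucket.
DEVICE_CAP = {"low": 480, "mid": 720, "high": 1080}

def choose_profile(ctx: UploadContext, headroom: float = 0.7) -> Profile:
    """Pick the richest profile the device can use and the link can sustain.

    `headroom` keeps the bitrate below measured throughput so playback has
    room for jitter; 0.7 is an assumed value, not a tuned one.
    """
    budget = ctx.measured_kbps * headroom
    cap = DEVICE_CAP.get(ctx.device_class, 480)  # unknown devices are treated conservatively
    for profile in LADDER:
        if profile.max_height <= cap and profile.video_kbps <= budget:
            return profile
    return LADDER[-1]  # fall back to the lightest rendition rather than refusing the upload
```

On a fast connection with a high-end phone this returns the 1080p profile; the same phone on a 1 Mbps link gets 360p, which fits the "feel good, not just look good" goal.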

That idea became the seed of NARA-1r.

At first, NARA-1r wasn’t a full AI model with a name and a personality. It was a set of rules, experiments, and scripts Ardit hacked together to make sure the next beta wouldn’t die at five concurrent users.

But bit by bit, it grew into something else: a streaming brain that sat behind Amaro Than and quietly made decisions about how heavy each piece of content was allowed to be.

Raw uploads would come in. NARA-1r would analyze them, adapt them, and prepare them for a world that didn’t care about perfect quality as much as it cared about “it just works.”

---

The optimization algorithm started with the basics:

* Limit resolution where it didn’t matter.

* Normalize framerates so weird source files didn’t explode CPU usage.

* Transcode into streaming-friendly formats.

* Generate multiple quality levels so the player could adapt on the fly.

* Create thumbnails and previews that loaded almost instantly.
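A static version of those basics maps naturally onto ffmpeg. The story doesn't say which tools NARA-1r uses, so treat this as a sketch under that assumption: the `scale` and `fps` filters, `libx264`, `aac`, and `-movflags +faststart` are standard ffmpeg options, while the rendition ladder, the 30 fps target, MP4 as the "streaming-friendly format", and the helper names are hypothetical. The functions only build argument lists, so they can be inspected or tested without running ffmpeg:

```python
from pathlib import Path

# Hypothetical rendition ladder: (output height, video bitrate).
RENDITIONS = [(1080, "4500k"), (720, "2500k"), (480, "1000k")]
TARGET_FPS = 30  # assumed normalized framerate

def rendition_commands(src: str, out_dir: str, source_height: int) -> list[list[str]]:
    """Build one ffmpeg command per quality level, skipping any that would upscale."""
    cmds = []
    for height, bitrate in RENDITIONS:
        if height > source_height:
            continue  # limit resolution: never invent pixels the source doesn't have
        out = str(Path(out_dir) / f"{height}p.mp4")
        cmds.append([
            "ffmpeg", "-y", "-i", src,
            # resize (width auto, kept even) and normalize odd source framerates
            "-vf", f"scale=-2:{height},fps={TARGET_FPS}",
            "-c:v", "libx264", "-b:v", bitrate,
            "-c:a", "aac", "-b:a", "128k",
            "-movflags", "+faststart",  # put the index first so playback starts before download ends
            out,
        ])
    return cmds

def thumbnail_command(src: str, out_dir: str) -> list[str]:
    """Grab a single small frame at the 1-second mark as an instant-loading preview."""
    return ["ffmpeg", "-y", "-ss", "1", "-i", src,
            "-frames:v", "1", "-vf", "scale=-2:360", str(Path(out_dir) / "thumb.jpg")]
```

Each list can be run with `subprocess.run(cmd, check=True)`, ideally from a background worker rather than the request that accepted the upload. A 720p source yields only the 720p and 480p renditions.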

But that was only the start.

Ardit didn’t want a static pipeline. He wanted something that could learn from traffic over time.

If certain devices struggled with a specific bitrate, NARA-1r would adapt future outputs.

If certain regions had consistently weak upload speeds, the algorithm would be more aggressive there, prioritizing reliability over pixels.

If specific patterns of usage (late-night watching, heavy weekend posting) were pushing bandwidth to the edge, it would pre-optimize and pre-cache content to smooth out the spikes.

The goal was simple, but ambitious: no single video should ever be able to slow down the entire platform again.
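"Learning from traffic" can start very simply. One common technique, swapped in here as an illustration and not confirmed as what NARA-1r does, is an exponentially weighted moving average (EWMA) of observed upload speed per region, which then sets a bitrate ceiling. `RegionStats`, the smoothing factor, the default prior, and the thresholds are all hypothetical:

```python
class RegionStats:
    """Smoothed upload throughput per region, as an exponentially weighted moving average."""

    def __init__(self, alpha: float = 0.2, default_kbps: float = 5000.0):
        self.alpha = alpha                # weight given to each new sample (assumed value)
        self.default_kbps = default_kbps  # prior used before a region has any samples
        self.ewma: dict[str, float] = {}

    def observe(self, region: str, kbps: float) -> None:
        """Fold one measured upload speed into the region's running average."""
        prev = self.ewma.get(region)
        self.ewma[region] = kbps if prev is None else self.alpha * kbps + (1 - self.alpha) * prev

    def bitrate_cap(self, region: str) -> int:
        """Weaker regions get a lower ceiling: reliability over pixels."""
        kbps = self.ewma.get(region, self.default_kbps)
        if kbps < 1500:
            return 600
        if kbps < 4000:
            return 1000
        return 2500
```

Because each new sample only moves the average by 20%, one unusually fast or slow upload doesn't swing a whole region's settings, while a sustained change still shows up within a handful of uploads.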

---

But media optimization alone wasn’t enough.

The first beta had also revealed another truth: plain request-and-response HTTP alone wasn’t the right tool for interactions that needed to feel live.

During those first tests, every like, comment, and presence change had been a separate request, stateless and a little bit blind. Under load, they queued up behind heavier tasks, and the app felt sluggish and unpredictable.

So Ardit and the team started to rewire the way Amaro Than talked to itself.

They moved live presence, typing indicators, and fast interactions to WebSockets—persistent connections that could carry thousands of tiny signals without drowning in overhead. What had been a series of disconnected calls became a continuous conversation.

The feed itself learned a new rhythm. Heavy operations like video processing were pushed into background jobs, so the user didn’t pay the price up front. Posting became:

1. Accept quickly.

2. Hand off to the optimization brain.

3. Update the feed as soon as the story is “good enough” to appear.

4. Upgrade quality silently in the background if needed.
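The four steps above can be sketched with a job queue and a background worker. This is an illustrative `asyncio` version, not the team's actual setup: `PostPipeline`, `Post`, the status names, and `fake_transcode` (a stand-in for the real optimization step) are all hypothetical:

```python
import asyncio
from dataclasses import dataclass, field

@dataclass
class Post:
    post_id: int
    raw_path: str
    status: str = "accepted"  # accepted -> visible -> upgraded
    renditions: list[str] = field(default_factory=list)

async def fake_transcode(raw_path: str, quality: str) -> str:
    """Hypothetical stand-in for the real optimization step (e.g. an ffmpeg job)."""
    await asyncio.sleep(0.01)
    return f"{raw_path}.{quality}.mp4"

class PostPipeline:
    def __init__(self) -> None:
        self.jobs: asyncio.Queue = asyncio.Queue()
        self.feed: list[Post] = []
        self._next_id = 1

    async def accept(self, raw_path: str) -> Post:
        """Step 1: record the upload and answer immediately; no transcoding here."""
        post = Post(self._next_id, raw_path)
        self._next_id += 1
        await self.jobs.put(post)  # Step 2: hand off to the background worker
        return post

    async def worker(self) -> None:
        while True:
            post = await self.jobs.get()
            # Step 3: a light rendition first, so the post appears as soon as it's good enough.
            post.renditions.append(await fake_transcode(post.raw_path, "480p"))
            post.status = "visible"
            self.feed.append(post)
            # Step 4: upgrade quality afterwards, without holding up the feed.
            post.renditions.append(await fake_transcode(post.raw_path, "1080p"))
            post.status = "upgraded"
            self.jobs.task_done()

async def demo_post() -> Post:
    pipeline = PostPipeline()
    worker = asyncio.create_task(pipeline.worker())
    post = await pipeline.accept("clip.mov")
    assert post.status == "accepted"  # the user gets an answer before any processing
    await pipeline.jobs.join()        # wait for background work (demo only)
    worker.cancel()
    return post
```

The point of the split is that the request path only does the cheap part. However heavy the video is, it lands on the worker's queue instead of tying up the process that answers other users.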

Meanwhile, the caching system was redesigned from scratch.

Instead of treating every request like a fresh question, Amaro Than learned to remember what people asked for—and where they asked from.

Popular stories were kept close to users. Edge caches and local caches worked together, serving content at speeds the original XAMPP box could never dream of. The database was no longer the first stop; it became the source of truth behind several smarter layers.

The result was subtle but powerful: users were now mostly talking to cached, optimized, tested paths. The fragile parts were hidden behind strong ones.
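The layering can be illustrated with a read-through cache: ask the fastest layer first, fall back layer by layer, and touch the database only on a full miss. This is a minimal in-process sketch; real edge caches are typically CDN nodes rather than Python objects, and `TTLCache`, `read_through`, the capacities, and the TTLs are hypothetical. As written, `None` is treated as a miss, so it can't be cached:

```python
import time
from collections import OrderedDict
from typing import Callable

class TTLCache:
    """Small LRU cache whose entries expire `ttl` seconds after being stored."""

    def __init__(self, capacity: int, ttl: float, clock: Callable[[], float] = time.monotonic):
        self.capacity, self.ttl, self.clock = capacity, ttl, clock
        self._data: OrderedDict = OrderedDict()  # key -> (expires_at, value)

    def get(self, key):
        item = self._data.get(key)
        if item is None:
            return None
        expires_at, value = item
        if self.clock() >= expires_at:
            del self._data[key]       # stale: let the next layer answer and refresh it
            return None
        self._data.move_to_end(key)   # mark as recently used
        return value

    def put(self, key, value) -> None:
        self._data[key] = (self.clock() + self.ttl, value)
        self._data.move_to_end(key)
        if len(self._data) > self.capacity:
            self._data.popitem(last=False)  # evict the least recently used entry

def read_through(layers: list, key, load_from_db: Callable):
    """Try each layer in order; on a full miss, load from the source of truth
    and backfill every layer so the next request stops earlier."""
    for i, layer in enumerate(layers):
        value = layer.get(key)
        if value is not None:
            for upper in layers[:i]:
                upper.put(key, value)  # promote into the faster layers above
            return value
    value = load_from_db(key)
    for layer in layers:
        layer.put(key, value)
    return value
```

With a small local layer in front of a larger, longer-lived one, a popular story costs one database read and is then answered from memory until its TTL runs out.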

---

When they ran the next internal test, the setup was very different.

No more XAMPP carrying everything on its back. There was a tuned Linux server. Real web server configuration. Background workers. WebSockets humming quietly. And, in the middle of it all, NARA-1r watching the media.

They invited another small group. Some from the first test. Some new.

“Do exactly what broke it last time,” Ardit told them.

They did.

Videos. Multiple uploads at once. People scrubbing through timelines, scrolling, reacting, messaging, all at the same time.

The difference was obvious—not in the metrics (those came later), but in the silence.

Nobody asked, “Is it down?”

Nobody said, “It’s stuck again.”

People just used it.

On the dashboard, CPU rose, but gracefully. Disk writes spiked, but stayed controlled. Network looked busy, not panicked. The caching layer lit up: hits, hits, hits. Background jobs picked up raw files, passed them through NARA-1r, and delivered lighter, smarter versions back to the platform.

For the first time, the app behaved the way they had always imagined it: calm on the outside, busy and intelligent on the inside.

---

Looking back, that first beta wasn’t a failure.

It was a mirror.

It showed them exactly what would have happened if they had shipped too early on a stack that wasn’t ready to carry real life. It took only five active users to prove that “it works on my machine” is not the same as “it works for our people.”

The crash made something very clear:

* Amaro Than couldn’t be just another app that falls apart under weight.

* It had to be a platform that expects weight—videos, traffic, stories, entire communities—and treats it as normal.

NARA-1r, the optimization algorithm, the WebSockets, the new caching system—none of these were shiny features on a landing page. They were the quiet results of that night when the test server collapsed under five people and one raw video.

Elvis saw it as risk management: reduce the chances that infrastructure would embarrass the mission.

Driton saw it as respect: if you invite people into something and ask them to trust it, you owe them more than a fragile experience.

Ardit saw it as design: how it works when things go wrong is more important than how pretty it looks when everything is perfect.

---

Today, when someone opens Amaro Than and posts a video, they don’t think about any of this.

They don’t think about upload speed graphs, or buffer sizes, or adaptive bitrates. They don’t know that somewhere in the background, an engine named NARA-1r is reshaping their file into something the network can carry without bringing the whole app to its knees.

They just know that it goes through.

That the feed keeps moving.

That their friends can watch.

That the app doesn’t crash when the moment matters most.

And somewhere, buried deep in the logs and in the architecture, there’s a memory of that first beta: the day five people crashed a development server and forced Amaro Than to grow up.

Because that was the night they realized something simple and powerful:

If we want this to be “our place,” it has to stand even when everything around it shakes.

So they built a streaming brain, an optimization algorithm, and a caching system that treats every video like it could be the one that breaks the world—and then makes sure it doesn’t.

That’s the real story of the first beta.

Not that it failed.

But that it taught Ardit, Elvis, and Driton exactly what Amaro Than needed to become so it would never fail the same way again.
